Integrating AMQP with Apache Spark

Introduction

This application shows how to integrate AMQP-based products with Apache Spark Streaming. It uses the AMQP Spark Streaming connector, which receives messages from an AMQP source and pushes them to the Spark engine as micro-batches for real-time analytics. The Apache Spark deployment used here does not run in standalone mode; it runs on OpenShift. Finally, an Apache Artemis instance serves as the AMQP source/destination for the exchanged messages.

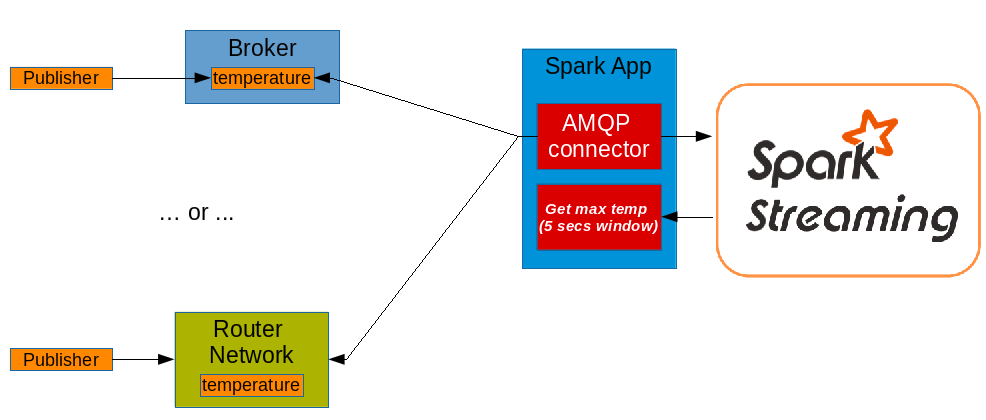

The application consists of a publisher that simulates temperature readings from a sensor and sends them via AMQP to the temperature queue on the broker.

At the same time, a Spark driver application uses the AMQP connector to read the messages from that queue and push them to the Spark engine.

The driver application shows the maximum temperature value over the last 5 seconds.
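The "maximum over the last 5 seconds" computation can be illustrated without Spark. The following is a minimal plain-Python sketch of the windowing logic only; it is not the connector's or the driver's actual code. The class name `SlidingMax` and its methods are hypothetical, and readings are assumed to arrive in timestamp order.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class SlidingMax:
    """Tracks the maximum temperature seen within the last `window` seconds.

    Hypothetical helper for illustration; assumes readings are added in
    non-decreasing timestamp order.
    """

    def __init__(self, window: float = 5.0) -> None:
        self.window = window
        # Each entry is (timestamp in seconds, temperature).
        self._readings: Deque[Tuple[float, float]] = deque()

    def add(self, timestamp: float, temperature: float) -> None:
        """Record one temperature reading."""
        self._readings.append((timestamp, temperature))

    def current_max(self, now: float) -> Optional[float]:
        """Return the max over readings newer than `now - window`, or None."""
        # Drop readings that have fallen out of the window.
        while self._readings and self._readings[0][0] <= now - self.window:
            self._readings.popleft()
        return max((temp for _, temp in self._readings), default=None)
```

In the real application the same idea would be expressed with Spark Streaming's windowed operations over the micro-batches delivered by the connector; the sketch only shows what the result means.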

Architecture

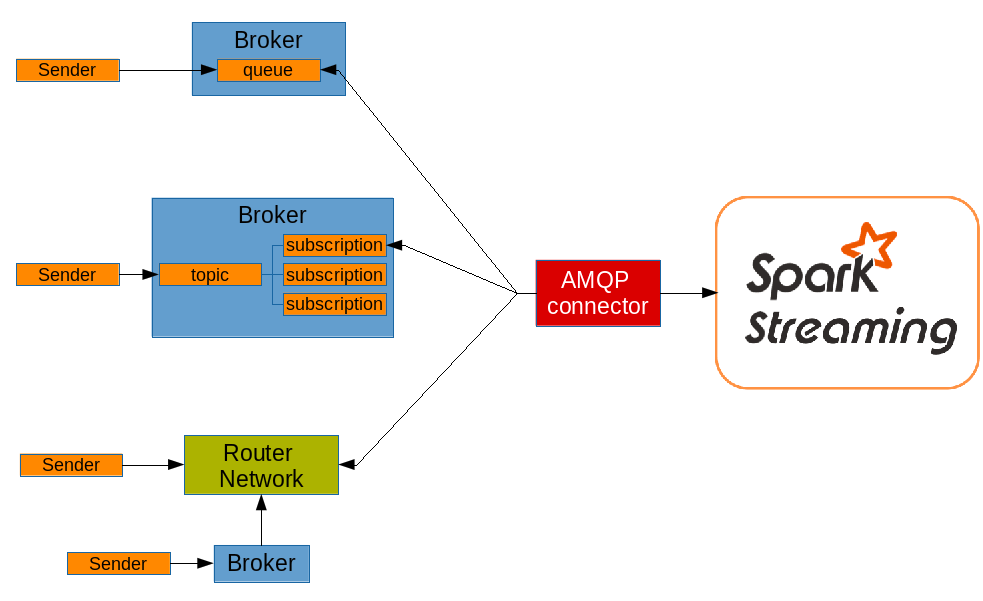

The scenario for using the AMQP - Spark Streaming connector can be described with following picture.

The main components that define the architecture of the application are:

- An Apache Spark cluster running on OpenShift
- An Apache Artemis broker instance hosting the queue used for exchanging messages
- The AMQP - Spark Streaming connector, which reads messages from that queue and provides them to the Spark engine for stream processing
- A simple client application that simulates a sensor and sends temperature values to the queue using the AMQP protocol
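To make the data flow between the components concrete, here is a small plain-Python sketch of the publisher side. It is only a stand-in: a standard-library `queue.Queue` plays the role of the broker's temperature queue, no AMQP library is involved, and the function names `simulate_sensor` and `drain`, as well as the 15 to 30 degree value range, are assumptions made for illustration.

```python
import queue
import random
from typing import List, Optional


def simulate_sensor(out: "queue.Queue[int]", count: int,
                    low: int = 15, high: int = 30,
                    seed: Optional[int] = None) -> None:
    """Put `count` random temperature readings on `out`.

    `out` stands in for the broker queue; the value range is an assumption.
    """
    rng = random.Random(seed)
    for _ in range(count):
        out.put(rng.randint(low, high))


def drain(source: "queue.Queue[int]") -> List[int]:
    """Read every reading currently waiting on the queue (the consumer side)."""
    values: List[int] = []
    while True:
        try:
            values.append(source.get_nowait())
        except queue.Empty:
            return values
```

In the actual deployment the publisher would send each reading as an AMQP message to the Artemis broker, and the connector, not a `drain` call, would deliver the messages to Spark.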

Installation

Usage

Running the application is straightforward; the steps are described in the running section of the upstream project.

Expansion

Users are free to change the driver application to apply different Spark operations and gather other insights (not only the maximum value) from the stream of temperature values.
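As one possible direction, the driver could report several statistics per window instead of only the maximum. The sketch below shows that aggregation in plain Python on an already collected window of readings; the function name `summarize` and the choice of statistics are hypothetical, and a real driver would express the same thing with Spark operations.

```python
from statistics import mean
from typing import Dict, Iterable, Optional


def summarize(temperatures: Iterable[float]) -> Optional[Dict[str, float]]:
    """Return min, max, mean and count for one window of readings.

    Returns None for an empty window, since min/max/mean are undefined there.
    """
    values = list(temperatures)
    if not values:
        return None
    return {
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "count": len(values),
    }
```

For example, `summarize([20, 22, 24])` yields a minimum of 20, a maximum of 24, a mean of 22 and a count of 3.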

Videos

No videos are available for this application.