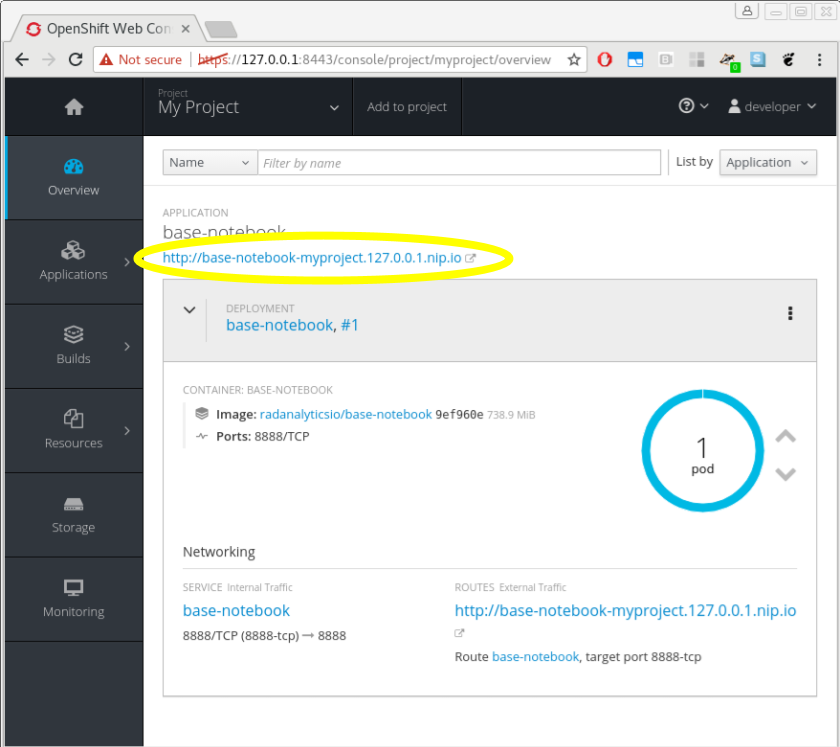

oc new-app radanalyticsio/base-notebook \ -e JUPYTER_NOTEBOOK_PASSWORD=supersecret \ -e JUPYTER_NOTEBOOK_X_INCLUDE=https://radanalytics.io/assets/s3-source-example/s3-source-example.ipynb oc expose svc/base-notebook

Accessing data in S3 with Apache Spark

Introduction

Processing data stored in an external object store is a practical and popular way for an intelligent application to operate.

This is an example of the key pieces needed to connect your application to data in S3. It is presented as steps in a Jupyter notebook.

Architecture

No architecture, this is a connectivity example.

Installation

Start a Jupyter notebook with,

From your OpenShift Console, go to the notebook’s web interface and

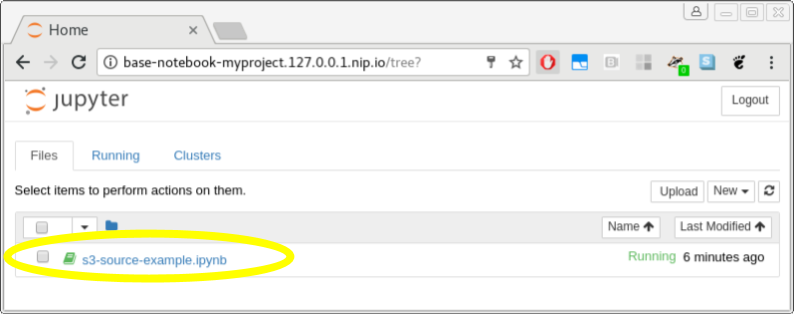

login with supersecret.

Open the notebook and try out the example code.

Usage

No specific usage.

Expansion

No specific expansion.

Videos

No video, follow the notebook steps.